Continuing the discussion from SIG Repos: How should they work?:

This is an important problem, and I think we can describe it as the “shared inputs” problem. It is the reason I think Aux can’t go fully distributed, at least right now. We have to have some form of a monorepo to coordinate shared inputs.

I wanted to use this thread as

- reference of what the problem is

- why its hard

- how we might one-day avoid the need for a monorepo

.

I’ve been working on this problem for a while (I feel like I say this about everything, but I swear they’re all interconnected). I was talking with Graham Christensen (flakehub core guy) and told him “look for my post next week”. That was almost 6 months ago ![]() , I still have yet to send this post to him because I keep refining it, but I think its worth posting here.

, I still have yet to send this post to him because I keep refining it, but I think its worth posting here.

.

My Proposal to Flakehub (Solving Shared Inputs)

I know it doesn’t look like it, but I’ve tried to make this as short as possible.

I would like to start pulling tons packages out of nixpkgs, making them standalone, generating a git-tagged version history for them, all before publishing them to flakehub. But, I feel that I shouldn’t because of a design issue.

The Problem: Shared Inputs

Lets establish a little example for context.

- Person1 publishes a numpy flakehub package

- Person2 publishes pytorch flakehub package

- Person3 is a user trying to use python+numpy+pytorch

All three of the packages (theoretically) take glibc as an input, and they all need the share the same glibc. They can’t each have their own version, at least not reliably. Finding a version of glibc that meets the needs of all three derivations is what I call the shared input coordination problem. Glibc is just one example, as this problem happens with many upstream dependencies (including effectively all python libraries).

The Nixpkgs monorepo solves this problem manually. E.g. if we use one nixpkgs commit for both torch and numpy, then the maintainers already did the work of finding a glibc version that worked for both. The manual approach has problems, as it burdens the maintainers a lot, and it is inflexible with package versions (one torch+numpy combo, can’t necessarily update one or the other). However, it kinda works.

Flakehub is radically different. Right now the burden of the shared input problem falls squarely on the user. Flake locks don’t say what inputs COULD be, they only say what inputs default to. When it comes to finding a shared input, AFAIK users have one and basically only one strategy; guess-and-check until it works. Once a project grows to 100 pip dependencies that all need various libpng, ffmpeg, cuda, and glibc versions, it becomes humanly unsolvable.

Expectations

To keep things grounded and tangible, I want to start with a sneak preview of the conclusion. I will be proposing for flakes on flakehub to include an additional output attribute.

- It will be win for me if I can get feedback/iteration on the design, and (eventually) get a thumbs-up from the Determinate Systems team.

- Step one is a standalone goal. That said, the hope is the design becomes a requirement for publishing on flakehub.

- I’d like to say this third goal is “out of scope” because it is important to simply focus on the first two. However, we wont get the full benefit without this third step; search tools. This could be an incredibly foundational and unique service for flakehub to offer. The tools are going to be really fun to design, like a leetcode optimization problem with tons of room for performance tricks with real world benefit. While the “extra flake attribute” I’m proposing needs to be a good format for the search tools to ingest, beyond that aspect the search tools themselves are not the focus of this post. This article is about eating our veggies, making the problem solvable, so that later we can get to the meat and potatoes of the search tools that finish the job.

Now that you know what I am aiming for, lets start eating our veggies.

“Best” Solution(s)

Nix’s shared input coordination problem is like trying to – simultaneously – jump-rope, juggle, and play hop-scotch. Nix is used for so many things, basically any change anywhere has negative implications for some task somewhere. I’ve been designing, testing, trashing, iterating and revising solutions for the last couple years, and I think I finally have something that

- prevents unfixable future problems

- is practical to implement and

- minimizes rough spots

Like a Rubik’s cube that’s been mislabeled, I think it’s provably impossible to maximize every side of this problem. There is no perfect solution but I do think there is one that stands above the rest.

Problem Components

Conceptually, there are two sides to shared-inputs

- A flake-publication needs a way to say “my output can satisfy X,Y requirements”. I’m going call these certificates. For example, python2 and python3 might both “provide” the python certificate.

- On the other side, flake inputs need a way to say “my input needs to meet some combination of (‘X or Y’, ‘X and Y’, etc)”. I’m going to call these requirements.

Requirements and certificates are two sides of the same coin. For that reason I’d like to call this the req-cert system.

If we encode these correctly, we should be able to throw our best automated solvers at it (instead of the caveman guess-and-check approach). Getting this encoding correct is the top priority.

Note: I’m going to talk like every flake has only one derivation because its easier that way. We can address multi-derivation flakes with the same approach.

Step 1: Certificates as Strings

Similar to solving a Rubik’s cube for the first time, I’m going to approach one side/color at a time. Meaning, hold criticisms, because we’ll revisit “solved” steps and enhance or replace them. I think it’s easier to read this way.

Think about publishing a python flake. For python, a publisher might say

certificates = [

"python"

"v3.10"

"CPython"

"python-minimal"

"python_full"

]

On the other side, something that needs python as an input, might want to say:

# "CPython" AND "python_full"

# OR

# "Jython" AND "python_full"

python_requirements = [

[ "CPython" "python_full" ]

[ "Jython" "python_full" ]

]

Each string is effectively a boolean check; either the input has “CPython” in its certificates list or it does not.

Already, this design means:

- Some kinds of requirements can’t be made

- There is a complexity (e.g. size complexity) concern

- There’s a usability/ergonomic concern, and

- The input requirements structure isnt really defined yet

Lets continue eating our veggies and address each of these.

Step 1 Problem 1: Versions

You might have noticed the system isn’t graceful at checking versions. E.g. what if we just want to require the python input to be version 3 but not python 3.5. For now, lets try addressing that with as minimal of a change as possible.

certificates = [

"python"

"v3"

"v3.10"

"v3.10.5"

]

It’s ugly, doesn’t allow true version-range checks, and we will come back to this. But for now, this pattern handles at least the common cases: We can now require something as broad as “v3” or something as specific as “v3.10.5”.

Problem 2: Arbitrary Strings

Consider hundreds of devs, of all ages and backgrounds, who don’t know each other, who don’t have time to talk, but they need to coordinate as a group through an API. An API that they don’t control.

- Unfortunately for me, I am already in the midst of this problem when working on syntax highlighting grammars.

- Fortunately for you, being in that situation has caused me to spend excessive hours devising ways to avoid it.

Both our step 1 solution and the textmate syntax highlighters use an API of anything-goes-strings.

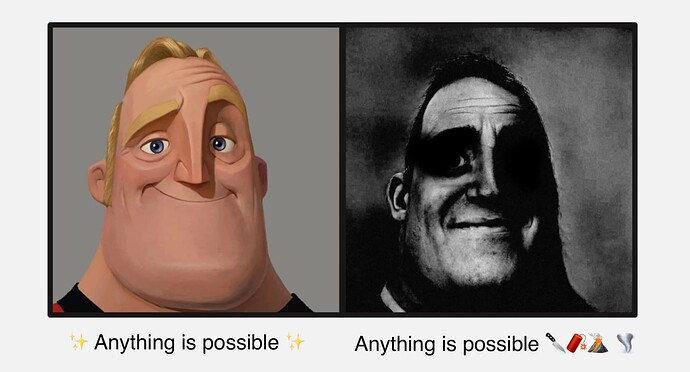

Sadly, the dark half of “anything is possible” is not only more common, but, specifically here, it increases in dominance over time.

We’ve got to keep eating our veggies, and get on the same page of why and how this problem happens.

Let’s go to exhibit A; VS Code. When VS Code looks at text, like the this keyword in javascript, each word is given list of tags. For example this might have the tags [ “variable” “special” “object” ]. This is very similar to each flake having a list of certificates. Zooming out, that list of tags is created by … someone … somewhere. Lets say tags are created by people-group 1. Everything is fine. Well… except the text in VS Code still has no color, there’s just a bunch of words and tags. That’s where people2 come in. People2 are the theme makers. Themes say “words with the variable tag get colored red, and words with the special tag get colored blue” etc. These rules are very much like input requirements of a flake; two sides of one interface.

Okay, so words get colors. Sounds good right? Maybe.

The thing is, people1 don’t coordinate with each other. Variables are NOT tagged with “variable”. They are tagged with “var” in one language, “variable-name” in another language, “varName” in another, and “identifier” in yet another. On the flip side, not only do people2 need to know every single var-name name (just to highlight a var!) but also there is no organized place for people2 to even find those var-name names! They have to just… look around in random codebases and kind of guess.

But it gets worse. The SAME tag can mean different things in different languages. Identifier in Prolog is very different than identifier in Javascript.

And it gets EVEN WORSE. New tags (from new languages) start with zero support. People2 (themes) already struggle with finding names, so how can they possibly know names of niche recently-released tags? People1 would need to make a PR on each of People2’s codebases, which is beyond impractical.

But devs aren’t the kind to say “I can’t do it the correct way … guess I’ll just … give up”. So what do niche languages (like nix) do? Easy. They intentionally miss-label everything. Theme’s don’t know about col1 and col2 tags for csv? Just call col1 variable and col2 a keyword. Theme’s don’t know what a prolog predicate is? Just say its regex. Theme’s don’t know what an atom is? Just tag it like an html attribute.

The truly worst part is; this can’t be fixed later.

Unfixable, intentionally wrong requirements/certificates would a very very very bad outcome for flakes. How can we avoid this?

Step 2: Naming Hubs

We are trying to juggle and play hopscotch, we want to avoid this naming problem but keep the decentralized nature of nix.

Lets rework the python example:

certificates = [

"nixos.org:name:python"

"flakehub.com:version:3"

"flakehub.com:version:3.10"

"flakehub.com:version:3.10.5"

"flakehub.com:feature:cpython"

"flakehub.com:feature:python-minimal"

"flakehub.com:feature:python-full"

"unstandarized:blazingly-slow"

]

Looking unwieldy? Maybe. But, we must first address cant-be-fixed-later then unwieldyness.

The example above is an outcome of the following string restrictions:

- Strings need a namespace, a colon, and then text. Everything is lower case.

- Normal namespaces need to follow URL syntax restrictions (the syntax after the “https://” or “file:///”). The namespace doesn’t need to be a real URL, it just needs to follow the syntax rules. The idea is; the namespace could correspond with a website that has an exhaustive lists of terms, patterns, conventions, etc.

- The text after the namespace could be very restricted, maybe something like words composed of lowercase letters, digits, with dashes inbetween then with colons inbetween words. Or for regex ppl

/(:[a-z0-9][a-z0-9\-]*(?<=[a-z0-9]))+$/ - Note: being overly-restrictive is fine because its a can-be-fixed-later problem. We can ALWAYS allow more chars in the future, but not vice-versa. These restrictions keep lots of room available for future special/official namespaces. For example, “optional” or “system” could be special namespaces. I’ll come back to these.

- The “unstandardized” namespace (at the bottom of the example) is more of “whats possible” example not really a “whats recommended”. Its a special namespace (not following url syntax); a designated area for hacky/temp/tinkering requirements, like someone who made a package for the first time.

There is one big note needed here. Restrictions must be enforced ONLY at publication time not at download time. For example, nix-env can’t download a flake and ASSUME that all requirement-strings contain a colon. This is because a flake from the future might (valid-ly) contain no-colons as part of some future-feature. If nix-env and other tools assume these requirements, we will be unable to solve future problems.

Back to namespace hubs. I think this design will prevent the no-standards duplicate naming and intentionally wrong naming issue. Moving on, we’ve got a lot to cover.

Problem 3: Data Structure Logistics

Where would these certificate/requirement lists go?

Our biggest design restriction is probably that requirements/certificates need to be serialized when publishing. Without that we can’t add them to a search engine, build an efficient index, and discover packages that meet certain requirements.

At first glance, this structure seems nice:

{

inputs = {

python = {

requirements = [ "v3.10" ];

};

};

}

It’s serializable and has tight coupling (change an input, and it’s obvious the requirement also needs to change). However, it falls apart quickly:

- Requirements can be in groups, e.g. (python310 and glibc68) OR (python311 and glibc99)

- The unwieldy-ness problem can usually be solved with imported tools. But importing tools in the

inputsarea is itself unwieldy. So we can’t choose this design if we want to cut down on boilerplate later. - If there are different requirements for different operating systems, this approach just fails

- If there are different fallback behaviors (e.g. torchWithCuda, torchWithoutCuda) there’s no way of handling it

Using outputs instead of inputs is not problem free.

- Having requirements as outputs makes it look like requirements can be dynamically computed at install time. Which is not true; they need to be serialized/constant at publication time.

- Theres also the risk that people accidentally require building the derivation in order to generate the certificates/requirements. This would make checking the requirements/certificates prohibitively expensive.

Info could be stored outside of the flake.nix, but that has obvious usability downsides.

So, all options considered, I think we can minimize the downsides of using outputs, and have it be the best approach. When publishing to flakehub, we simply evaluate the requirement-attribute of the output, try to serialize it, and give helpful messages when it’s not serializable. Ideally this would be a flake-lock step, but doing it at publication time should suffice.

Step 3: Data Structure v1

The following structure is closer to viable.

{

inputs.python.url = http://somewhere.com;

outputs = { python, ... }:

{

requirements = [

{

python = [ "python" "v3" ];

}

{

python = [ "python" "v2" ];

}

];

certifications = {

packages.x86_64-linux.default = [ "numpy" "v1" "v1.25" ];

packages.x86_32-linux.default = [ "numpy" "v1" "v1.25" ];

packages.aarch64-linux.default = [ "numpy" "v1" "v1.25" ];

};

}

;

}

That translates to “I prefer python v3 but can fallback on python v2”, and “I provide numpy 1.25 for linux”.

Yes, clunky, lets address functionality first though.

Problem 4: Optional Certificates

- Can requirements be a function of the OS?

- Can which-input-requirements-are-met impact which certifications are provided?

For # 1, yes, there are certainly times where the OS matters for requirements. Some OS’s lack some builtin tools, and they effectively need an additional package to compensate.

For # 2, it seems rare. However, I think there’s two important scenarios. First is feature flags. If there is only one flag, such as “cuda”, then its not too big of a deal to have two packages like torchWithCuda and torchNoCuda. But, with more than one flag, it starts to become catastrophic. Given 5 boolean feature flags, we need (2^n) 32 packages, e.g. openCvWithCudaVfpv3NoNeonVsx, openCvWithVfpv3NoCudaNeonVsx, openCvWithNeonCudaNoFpv3Vsx, etc. Thats just one scenario. The second is that packages often provide an extra-feature for a specific system. So the answer is “yes” which input requirements are met could affect which certificates are provided.

How can we make a datastructure that supports both of these needs?

Step 4: Data Structure v2

The following structure is much closer to viable.

{

inputs.os.url = https://os-requirements-placeholder.com;

inputs.python.url = https://somewhere;

inputs.optionalCuda.url = https://trickery;

outputs = { os, python, optionalCuda, ... }:

{

requirementMapping = [

{

inputs = {

os = [ "linux" "x86" "64bit" ];

python = [ "python" "v3" ];

optionalCuda = [ "v11" ];

};

outputs = {

packages.default = [ "numpy" "v1" "v1.25" "cuda" ];

};

}

{

inputs = {

os = [ "linux" ];

python = [ "python" "v3" ];

};

outputs = {

packages.default = [ "numpy" "v1" "v1.25" ];

};

}

{

inputs = {

os = [ "mac" ];

python = [ "python" "v2" ];

};

outputs = {

packages.default = [ "numpy" "v1" "v1.25" ];

};

}

];

}

;

}

Which translates to “if cuda is available, and I’m on linux, then I offer the cuda-enhanced certificate. Otherwise, for theses other systems, I just offer the normal numpy stuff”.

To be clear:

- flakes keep their normal output (the

packages.${system}.default). osis not magic. Its a normal input, that is usuallynull, and will never besystem. It’s not a hard-coded name, it is not a required input for building a derivation, nix will treat it as any other input. os will effectively always be null, which I know sounds weird, but the purpose is for metadata. Only the publish step will treat it differently, which I’ll explain some other time because its mostly irrelevant.- In this design, the requirementMapping is checked from top to bottom. If a set of requirements is met, then the remaining requirement mappings are not even checked. This can lead to situations like accidentally putting

system = [ "linux" ]first, and thensystem = [ "linux" "64bit" ]second. The second case will absolutely never be evaluated since its a subset of the first case. Thankfully, this kind of accidental-superset can often be detected automatically at publication time, so we could at least warn the user on obvious cases.

Problem 5: Feature Combinatorics

There’s still at least one functional problem. If something like ffmpeg or opencv has 30 boolean feature flags, then the data structure above will need 2^30 entries; one for every possible certificate-output combination. Thats a problem.

Step 5: Data Structure v3

We can get around this with horn clauses. Or, for those who don’t read first order logic textbooks, conditional statements with a single consequence. The structure should read like “you’ll get the cuda certificate IF you meet one of the following requirement sets”.

If a requirement set is empty, that means there is no requirements at all.

{

inputs.os.url = https://os-requirements-placeholder.com;

inputs.python.url = https://somewhere;

inputs.optionalCuda.url = https://trickery;

outputs = { os, python, optionalCuda, ... }:

{

certificateMapping = {

"nix.org:feature:cuda" = [

{

os = [ "nix.org:os:linux" "nix.org:cpu:x86" "nix.org:bitness:64bit" ];

optionalCuda = [ "nix.org:version:11" ];

}

];

"nix.org:pkg:numpy" = [ {} ];

"nix.org:version:1" = [ {} ];

"nix.org:version:1.25" = [ {} ];

"nix.org:version:1.25.1" = [ {} ];

"minimum" = [ # <- special name

{

os = [ "nix.org:os:linux" ];

python = [ "nix.org:pkg:python" "nix.org:version:3" ];

}

{

os = [ "nix.org:os:mac" ];

python = [ "nix.org:pkg:python" "nix.org:version:3" ];

}

{

os = [ "nix.org:os:linux" ];

python = [ "nix.org:pkg:python" "nix.org:version:2" ];

}

{

os = [ "nix.org:os:mac" ];

python = [ "nix.org:pkg:python" "nix.org:version:2" ];

}

];

};

}

;

}

This translates to “if you want cuda acceleration, then I need linux x86 and cuda 11, here’s my normal certificates (numpy v1.25.1) they don’t require anything, btw you need at minimum linux/mac with python 3 or python 2 to build me”.

The special “minimum” certificate just means the package will not build at-all without those requirements.

This structure does not suffer from the combination explosion.

If you are thinking “well that handles a certificate explosion, but what about a requirement explosion?” then don’t worry, that is not really a thing. Certificates are not symmetrical with requirements in that way. For certificates (outputs) we needed both a feature-flag=true case and feature-flag=false case to be in a list. But for requirements (inputs) we only need the true case. We don’t say “this (numpy) package does NOT need Gnome, and does NOT need Haskell, and does NOT need Lisp, etc” we only list what IS needed, which avoids an exponential explosion.

Problem 6: Ergonomics

Finally, we can start simplifying.

{

inputs.requirementTools.url = https://requirement_tools.com;

inputs.os.url = https://os-requirements-placeholder.com;

inputs.python.url = https://somewhere.com;

inputs.optionalCuda.url = https://trickery.com;

outputs = { requirementTools, os, python, optionalCuda, ... }:

{

certificateMapping = (

let

inherit (requirementTools) pkg oneOf allOf OS feature build optional;

in

requirementTools.build pkg {

name = "numpy";

version = "1.25.3";

requiredInputs = oneOf [

{

python = pkg {

version = oneOf [ 3 2 ];

features = allOf [ "CPython" ];

};

os = oneOf [

(allOf [

(OS allOf [ "linux" "64" ])

(OS oneOf [ "x86" "arm" ])

])

(allOf [

(OS allOf [ "darwin" "64" ])

(OS oneOf [ "x86" "arm" ])

])

];

}

];

features = [

(optional {

value = feature "cuda";

requiredInputs = {

optionalCuda = pkg { name = "cuda"; version = "11"; };

os = OS allOf [ "linux", "x86", "64" ];

};

})

];

}

);

}

;

}

I’m sure there’s more ergonomic alternatives, but that’s just a rough example of how massively different the API can be, while still using the structure and name-hubs system under the hood for maximum flexibility.

Big Picture

Lets put everything together

- Someone makes a flake

- They have a requirementTools input

- They define a

certificateMappingattribute on their flake output - They trigger the github action to publish to flakehub

- The github action evaluates

certificateMappingattribute, checks if naming standards are followed, the serializes it and pushes to flakehub.org - Flakehub.org probably does some kind of indexing/cache-building.

- Some time later, like a week later, someone tries to solve a shared-input problem.

- They have list of inputs (for example project dependencies), along with a set of requirements (like the project needs SQL 3.2.1), and an attribute summarizing their OS/machine

- They serialize that info, send it to flakehub and ask “what inputs would satisfy these requirements?” (E.g. list of flake url’s and versions)

- Flakehub solves this recursively, such as finding that input1 and input2 share a dependency, and finding a version of that dependency that meets input1’s requirements, input2’s requirements, and the project’s requirements. It then returns both a list of viable input versions/combinations, and also the overrides necessary to force input1 and input2 to share the same upstream dependency.

- The user receives the response from flakehub. They use the first answer as their project inputs and … the project still might not build. But! There is a list of viable inputs, and after trying a couple more the project actually builds.

Final Design Aspect: Performance

Last bit of veggies that we need to eat; I said I’d come back to version ranges, and really it is one of many lingering things:

- malicious packages that claim to have certificates for everything

- some packages have constraints but don’t need to be shared (input1 needs python2 and input2 needs python3, they don’t need to share the same python)

- paired dependencies. Ex: deno_core_123 and deno_networking_123

- stacked dependencies. Ex: I need numpy built-using-clang-12-as-an-input, not numpy built using gcc 10 as an input (assuming that “built with clang 12” is not provided as a certificate by numpy)

- The valid-ish need of dynamic certificates, and the complex consequences of trying to support them

There is one hidden aspect I’ve been designing for all along; performance. Going back to jumping rope, juggling, and playing hop-scotch, the final boss is doing all-that at 100mph on top of a train named “performance”. We/flakehub need to be able to solve shared inputs quickly, despite it being NP hard.

It was no accident that, at the beginning, we used strings like boolean-vars.

The boolean SAT problem is also NP hard (NP complex). But people have spent a whole lot of time optimizing SAT solvers. Our shared-inputs is not the same, but it is close. Between boolean SAT and the graph coloring problem, there are a lot of clever tricks we can borrow to optimize the heck out of the shared inputs problem. Even just replacing the strings with bits in a bit array to leverage xor can be a practical and major non-algorithmic speed up.

What does any of that have to do with version ranges?

Well the design already has room to support version ranges (and a bunch of other stuff). For example, a special “nix.org:pkg:[name]:min:1” and “nix.org:pkg:[name]:max:3” requirement, But I think we would loose a ton of performance – possibly so much that it becomes impractical for flakehub to even offer the solver as a service.

There might be a way to support version ranges, but I think we need to get a performant solver first, then see if performance can be maintained while adding true version ranges.

As for stuff like malicious packages, I think there are practical solutions, solutions that belong in a different discussion. This post is already super long.

Conclusion / Actionable Steps

Going all the way back; I’m looking for feedback. That is the next actionable step. Maybe there are aspects I missed, or a completely different way of approaching the problem. Either way, I really want to start making flakehub packages but feel I shouldn’t until there is a structured way to express requirements on the inputs.